Design Considerations for Scaling WebSocket Server Horizontally With a Publish-Subscribe Pattern


Intro

In my previous article, I wrote about designing and building a WebSocket server in a microservice architecture. Although the implementation works fine for a single instance of a WebSocket server, we will start facing issues when we try to scale up the number of WebSocket server instances (aka horizontal scaling). This article looks into the design considerations for scaling the WebSocket server using a publish-subscribe messaging pattern.

My WebSocket Server Series

- 01: Building WebSocket server in a microservice architecture

- 02: Design considerations for scaling WebSocket server horizontally with publish-subscribe pattern

- 03: Implement a scalable WebSocket server with Spring Boot, Redis Pub/Sub, and Redis Streams

- 04: TBA

What is Horizontal Scaling?

First, let’s understand why we need horizontal scaling. As our user base grows, so does the load on the server, and at some point a single server can no longer deliver good performance to every user. Our design therefore needs to let us increase the number of servers to meet demand and decrease it again to save resources.

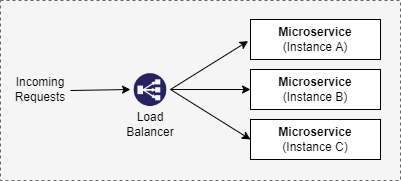

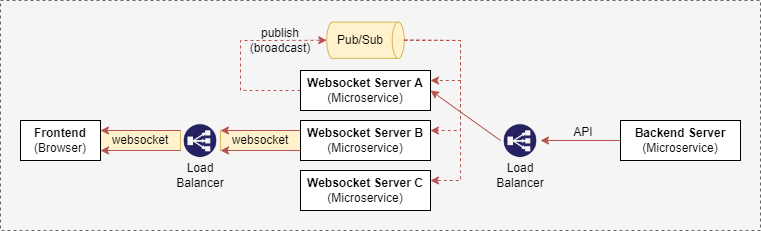

Horizontal scaling refers to adding more machines to your infrastructure to cope with high demand on the server. In our microservice context, scaling horizontally is the same as deploying more instances of the microservice. A load balancer is then required to distribute the traffic among the microservice instances, as illustrated in the accompanying diagram.
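To make the load balancer's role concrete, here is a minimal sketch of round-robin distribution, one of several strategies a load balancer might use. The class and the instance names are hypothetical and exist only for illustration; a real deployment would rely on an actual load balancer rather than application code like this.

```python
from itertools import cycle


class RoundRobinLoadBalancer:
    """Distributes requests across instances in a fixed rotating order."""

    def __init__(self, instances):
        if not instances:
            raise ValueError("at least one instance is required")
        self._instances = list(instances)
        self._rotation = cycle(self._instances)

    def route(self, request):
        # Pick the next instance in the rotation, regardless of the request.
        instance = next(self._rotation)
        return instance, request


# Hypothetical instance names, used only for illustration.
balancer = RoundRobinLoadBalancer(["instance-a", "instance-b", "instance-c"])
assignments = [balancer.route(f"request-{n}")[0] for n in range(6)]
print(assignments)
# ['instance-a', 'instance-b', 'instance-c', 'instance-a', 'instance-b', 'instance-c']
```

Note that the balancer chooses an instance without looking at the request. That is fine for stateless APIs, but it becomes a problem once an instance holds state such as an open WebSocket connection.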

With this, I hope you better understand why we need horizontal scaling in our infrastructure. So let’s move on to learn the design considerations for scaling WebSocket servers in a microservice architecture.

Quick Recap

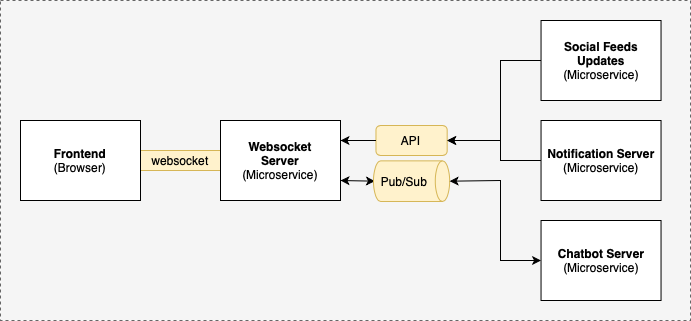

Previously, we implemented the WebSocket server using Spring Boot, STOMP, and Redis Pub/Sub. Communication between the web application (frontend) and the WebSocket server is via WebSocket, while communication between the microservices (backend) and the WebSocket server is via an API and a publish-subscribe messaging pattern. For more information, refer to the previous article.

What Are the Issues and Solutions?

The previous design works perfectly fine in a setup where we only have a single instance of each microservice. However, a single instance is not practical in a production environment, where we typically deploy each microservice with multiple replicas (or instances) for high availability. When we horizontally scale the WebSocket server (itself a microservice) or the backend microservices, we run into the following problems.

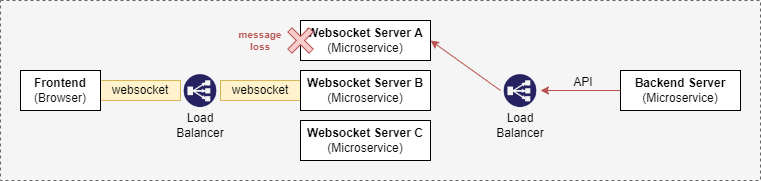

Issue #1: Message loss due to the load balancer

In the previous article, we added APIs for backend microservices to send messages to the WebSocket server for unidirectional real-time communication. As shown in the diagram, a load balancer handles traffic redirection once we scale the number of WebSocket servers.

In the above setup, an instance of the web application (frontend) establishes a WebSocket connection to the WebSocket server (instance B). When the backend server tries to send messages to the web application, the load balancer redirects the API request to the WebSocket server (instance A). Since WebSocket server (instance A) does not have a WebSocket connection to that particular instance of the web application, the message will be lost.
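The scenario can be reproduced with a small in-memory simulation. This is only a sketch under assumptions: the `WebSocketServer` class, the `handle_api_request` method, and the user id `alice` are hypothetical stand-ins, not the implementation from the previous article.

```python
class WebSocketServer:
    """Holds the WebSocket sessions that were opened against this instance."""

    def __init__(self, name):
        self.name = name
        self.sessions = {}  # user id -> list of messages pushed to that client

    def connect(self, user_id):
        self.sessions[user_id] = []

    def handle_api_request(self, user_id, message):
        # Only the instance that owns the session can push to the client.
        if user_id not in self.sessions:
            return False  # no local session, so the message is lost
        self.sessions[user_id].append(message)
        return True


instance_a = WebSocketServer("A")
instance_b = WebSocketServer("B")

# The frontend's WebSocket connection lands on instance B.
instance_b.connect("alice")

# The load balancer happens to forward the backend's API request to instance A.
delivered = instance_a.handle_api_request("alice", "order shipped")
print(delivered)                     # False
print(instance_b.sessions["alice"])  # []
```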

Solution for Issue #1: Broadcast messages using Pub/Sub

Note: This solution is greatly inspired by Amr Saleh, who wrote about Building Scalable Facebook-like Notification using Server-Sent Events and Redis. Do check that out!

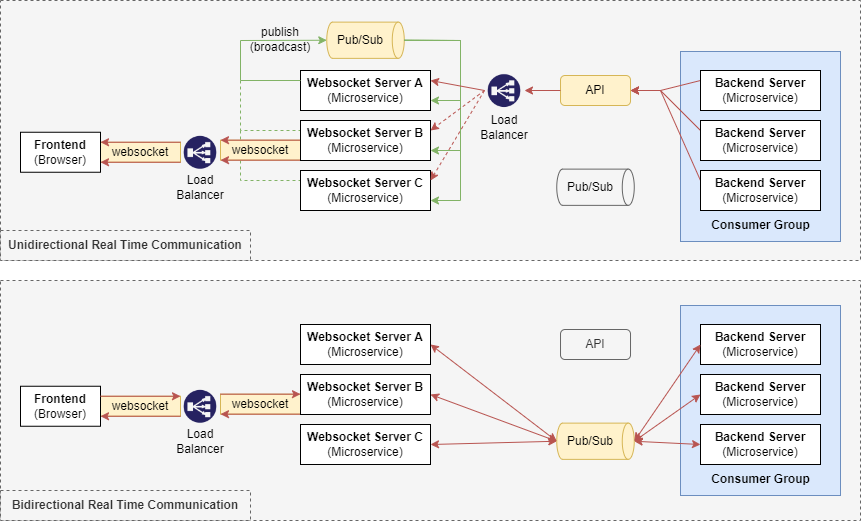

To resolve the first issue, we can introduce a broadcast channel using the publish-subscribe messaging pattern, where every message received from the backend microservices is broadcast to all WebSocket server instances, as shown in the diagram above. Whichever instance holds the WebSocket connection to the target web application (frontend) then forwards the message over that connection, while the other instances simply ignore it.
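A minimal in-memory sketch of this idea follows. The `BroadcastChannel` class stands in for a real pub/sub channel (such as Redis Pub/Sub), and the message shape with `user_id` and `body` keys is an assumption made for illustration.

```python
class BroadcastChannel:
    """In-memory stand-in for a pub/sub channel: every subscriber gets every message."""

    def __init__(self):
        self._subscribers = []

    def subscribe(self, callback):
        self._subscribers.append(callback)

    def publish(self, message):
        for callback in self._subscribers:
            callback(message)


class WebSocketServer:
    def __init__(self, name, channel):
        self.name = name
        self.sessions = {}  # user id -> list of messages pushed to that client
        channel.subscribe(self.on_broadcast)

    def connect(self, user_id):
        self.sessions[user_id] = []

    def on_broadcast(self, message):
        # Every instance sees the message; only the one holding the session forwards it.
        user_id = message["user_id"]
        if user_id in self.sessions:
            self.sessions[user_id].append(message["body"])


channel = BroadcastChannel()
instance_a = WebSocketServer("A", channel)
instance_b = WebSocketServer("B", channel)
instance_b.connect("alice")

# Whichever instance receives the API request publishes instead of delivering directly.
channel.publish({"user_id": "alice", "body": "order shipped"})
print(instance_b.sessions["alice"])  # ['order shipped']
```

The trade-off is that every instance now handles every message, so the work per instance grows with total traffic rather than with the number of connections it holds.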

Issue #2: Duplicate message processing due to multiple backend subscribers to a single topic

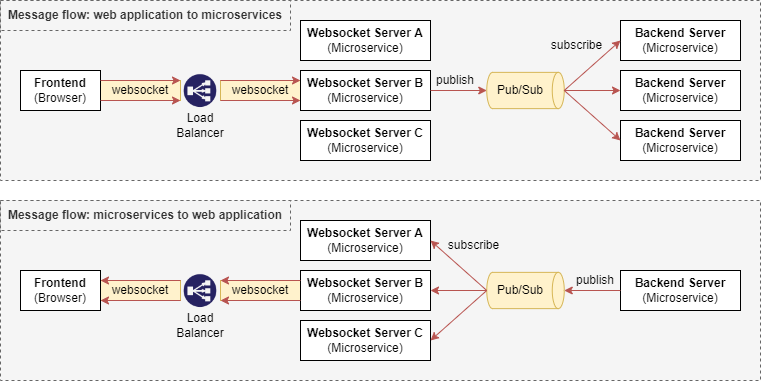

In the previous article, we used Redis Pub/Sub to handle bidirectional real-time communication between the WebSocket server (microservice) and the backend microservices. When we scale up the number of WebSocket servers and backend microservices, every subscriber to a Redis Pub/Sub channel receives every message published to it, as shown in the diagram.

Let’s look at the message flow in each direction in bidirectional real-time communication.

- Message Flow: Microservices to web application (no duplicated processing) → It is necessary for all instances of the WebSocket server to receive the messages as each web browser establishes a WebSocket connection with only a single WebSocket server instance. Hence, when messages flow from the backend microservices to the web application (backend → WebSocket server → frontend), only one instance of the web application will receive the message, which is the correct behavior.

- Message Flow: Web application to microservices (duplicated processing) → When messages are flowing from the web application to the backend microservices (frontend → WebSocket server → backend), we would expect only one instance of the backend microservices to process the message. However, all backend microservices (as subscribers) will receive the message, resulting in the message being processed multiple times, which is incorrect behavior.
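The duplicated processing in the second flow can be sketched with a plain fan-out topic. The `Topic` and `BackendInstance` classes and the message name are hypothetical, and the topic is an in-memory stand-in for a pub/sub channel without consumer groups.

```python
class Topic:
    """In-memory stand-in for a plain pub/sub topic (fan-out to every subscriber)."""

    def __init__(self):
        self._subscribers = []

    def subscribe(self, callback):
        self._subscribers.append(callback)

    def publish(self, message):
        for callback in self._subscribers:
            callback(message)


class BackendInstance:
    def __init__(self, name, topic):
        self.name = name
        self.processed = []
        topic.subscribe(self.handle)

    def handle(self, message):
        # Each replica processes whatever it receives.
        self.processed.append(message)


topic = Topic()
backends = [BackendInstance(f"backend-{n}", topic) for n in range(3)]

# One message from the frontend, relayed by the WebSocket server.
topic.publish("create-order-42")

times_processed = sum(len(backend.processed) for backend in backends)
print(times_processed)  # 3
```

A single request ends up processed once per replica, so the problem grows as more backend instances are added.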

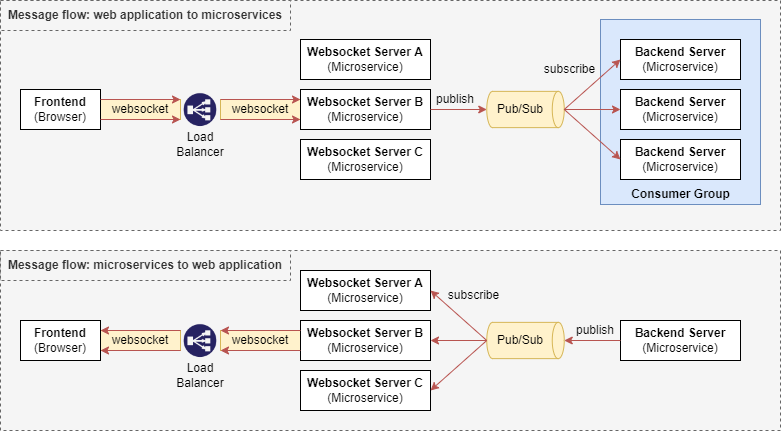

Solution for Issue #2: Pub/Sub with consumer groups

To resolve the second issue, we will make use of Consumer Groups (a concept popularized by Apache Kafka), where each message is delivered to only one subscriber within a group. If all instances of a backend microservice join the same group, only one of them receives a given message, so there is no duplicated message processing.
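Here is a minimal in-memory sketch of consumer-group semantics. The `GroupedTopic` class, the group names, and the round-robin choice of member are assumptions for illustration; real brokers decide which member receives a message in their own ways.

```python
from collections import defaultdict


class GroupedTopic:
    """In-memory stand-in for a topic with consumer groups."""

    def __init__(self):
        self._members = defaultdict(list)    # group name -> member callbacks
        self._next_index = defaultdict(int)  # group name -> round-robin counter

    def subscribe(self, group, callback):
        self._members[group].append(callback)

    def publish(self, message):
        # Every group gets the message, but only one member per group handles it.
        for group, members in self._members.items():
            index = self._next_index[group] % len(members)
            self._next_index[group] += 1
            members[index](message)


class Worker:
    def __init__(self, name):
        self.name = name
        self.processed = []

    def handle(self, message):
        self.processed.append(message)


topic = GroupedTopic()

# Three replicas of the same backend microservice share one group.
backends = [Worker(f"backend-{n}") for n in range(3)]
for backend in backends:
    topic.subscribe("order-service", backend.handle)

# A different microservice uses its own group and still sees every message.
audit = Worker("audit-0")
topic.subscribe("audit-service", audit.handle)

for n in range(3):
    topic.publish(f"message-{n}")

print([backend.processed for backend in backends])
# [['message-0'], ['message-1'], ['message-2']]
print(audit.processed)
# ['message-0', 'message-1', 'message-2']
```

Each message is processed exactly once per group, which is the behavior we want for replicas of the same backend microservice.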

Because Redis Pub/Sub, which I used in the previous article's implementation, does not support consumer groups, we can instead use Redis Streams, Google Cloud Pub/Sub, RabbitMQ, or Apache Kafka to implement the publish-subscribe messaging pattern with consumer groups. I will not go into detail on which is better for your implementation, as that is not the intent of this article.

Summary

To wrap things up, we have run through the design considerations on how to scale the WebSocket server in a microservice architecture horizontally. Essentially, we are using publish-subscribe messaging patterns to ensure that there is no message loss or duplicated message processing in the process of real-time communication between the web application (frontend) and microservices (backend).

That’s it! I hope you learned something new from this article. This article only covers the design considerations for scaling the WebSocket server. Stay tuned for the next one, where I will elaborate more on how you can implement this design using Redis Pub/Sub, Redis Streams, and Spring Boot.

Thank you for reading till the end! ☕
